| Difficulty | 3 |
| Prerequisites | |
| Reading material | |

    def scene_at(now)
    {
      var lookat = Animations.animate( pos(0,0,0), pos(0,0,-15), seconds(3) )[now]

      var camera = Cameras.depth_of_field( [ "eye": pos(0, 0, 5),
                                             "look_at": lookat,
                                             "up": vec(0, 1, 0),
                                             "distance": 1,
                                             "aspect_ratio": 1,
                                             "eye_size": 0.1,
                                             "eye_sampler": Samplers.multijittered(3) ] )

      var white = Materials.uniform( [ "ambient": Colors.white() * 0.1,
                                       "diffuse": Colors.white() * 0.8,
                                       "specular": Colors.white(),
                                       "specular_exponent": 20,
                                       "reflectivity": 0,
                                       "transparency": 0,
                                       "refractive_index": 0 ] )

      var black = Materials.uniform( [ "ambient": Colors.black(),
                                       "diffuse": Colors.white() * 0.1,
                                       "specular": Colors.white(),
                                       "specular_exponent": 20,
                                       "reflectivity": 0,
                                       "transparency": 0,
                                       "refractive_index": 0 ] )

      var checkered = Materials.from_pattern(Patterns.checkered(1, 1), black, white)

      var spheres = []

      for_each([-2..5], bind(fun (i, spheres) {
        spheres.push_back(translate(vec(-2,0,-i*3), sphere()))
        spheres.push_back(translate(vec(2,0,-i*3), sphere()))
      }, _, spheres))

      var spheres_union = decorate(white, union(spheres))

      var plane = decorate(checkered, translate(vec(0,-1,0), xz_plane()))

      var root = union( [spheres_union, plane] )

      var lights = [ Lights.omnidirectional( pos(0, 5, 5), Colors.white() ) ]

      return create_scene(camera, root, lights)
    }

    var anim = scene_animation(scene_at, seconds(3))

    var raytracer = Raytracers.v6()

    var renderer = Renderers.standard( [ "width": 500,
                                         "height": 500,
                                         "sampler": Samplers.multijittered(2),
                                         "ray_tracer": raytracer ] )

    pipeline( anim,
              [ Pipeline.animation(30),
                Pipeline.renderer(renderer),
                Pipeline.studio() ] )

1. Explanation

The depth of field camera can be parameterized as follows:

-

The eye, a

Point3D. -

The look at point (

Point3D), which specifies what the camera is looking at. This point is also the focal point: objects around this location will be sharp, far away objects will look blurry. -

The up vector (

Vector3D). -

The distance (

double) between the eye and the canvas. -

The aspect ratio (

double) of the canvas. -

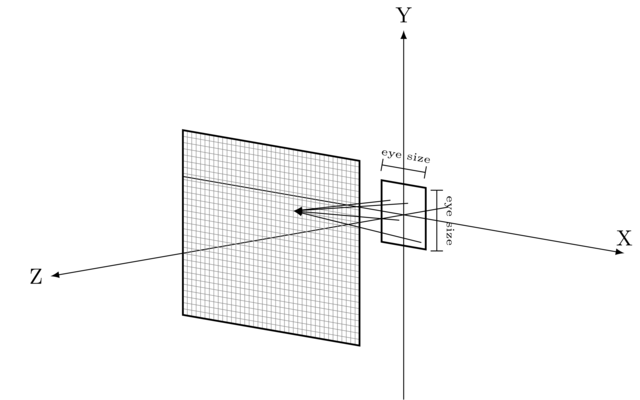

The eye size (

double). -

The eye sampler (

Sampler).

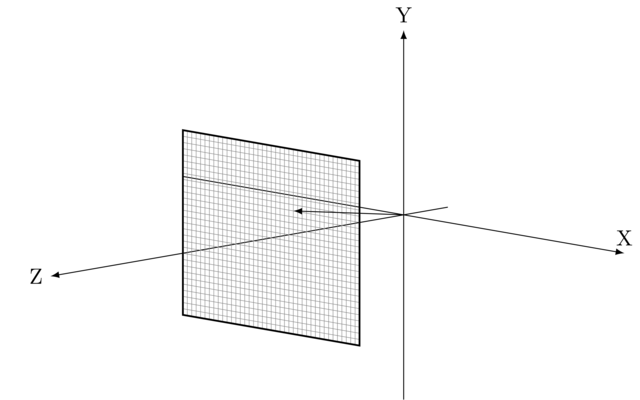

The depth of field camera can be seen as a multiple-eyed perspective camera.

Given a point on the canvas, the perspective camera only shoots one ray through it. This ray has its origin in the eye, i.e. \((0,0,0)\).

A depth of field camera’s eye is not a single point, but a square. Ideally, for a given point \(P\) on the canvas, the depth of field camera would shoot a ray from every point of this square through \(P\), but since there are infinitely many such points, this is not a realistic option. Instead, we pick a finite number of points spread across the eye area and cast rays from these, thereby hopefully approximating the ideal case.

The eye’s size is determined by the eye size parameter. The larger the eye, the more blurry out-of-focus objects will appear. We suggest a value of 0.5. The choice of points within the eye area is left to the eye sampler.
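As a minimal sketch of "a finite number of points spread across the eye area", the C++ fragment below places eye points on a regular grid over a square centered on \((0,0,0)\). Everything in it is an assumption made for illustration: Point3D is redefined as a plain struct so the fragment compiles on its own, sample_eye_points is a hypothetical helper, and the fixed grid merely stands in for whatever strategy the eye sampler uses.

```cpp
#include <cassert>
#include <vector>

// Stand-in for the ray tracer's own Point3D, redefined here so the
// sketch compiles on its own.
struct Point3D
{
    double x, y, z;
};

// Hypothetical helper: spreads n * n eye points over a square with side
// eye_size, centered on (0,0,0) in the XY plane. Each point is the center
// of one grid cell; a real eye sampler may pick its points differently.
std::vector<Point3D> sample_eye_points(double eye_size, int n)
{
    assert(n > 0);

    std::vector<Point3D> points;
    const double cell = eye_size / n;               // side of one grid cell
    const double start = -eye_size / 2 + cell / 2;  // center of the first cell

    for (int row = 0; row < n; ++row)
    {
        for (int col = 0; col < n; ++col)
        {
            points.push_back(Point3D{ start + col * cell, start + row * cell, 0.0 });
        }
    }

    return points;
}
```

Calling sample_eye_points(0.5, 3) would yield nine eye points. Each of them would then serve as the origin of one ray through the canvas point \(P\).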

2. Implementation

Let’s start off with the definition of a DepthOfFieldPerspectiveCamera class.

Create new files

The factory function is where most complexity lies. The function will create a series of "almost-canonical" perspective cameras:

- The eye of each perspective camera is located around \((0,0,0)\). The exact positions are randomly picked by the eye_sampler.
- The cameras are looking straight in front of them in the direction of the positive Z-axis.
- Each camera is standing straight up, i.e., not tilted in any direction. Up is up.
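The three properties above can be sketched as follows. This is not the project's actual factory function: the types are simplified stand-ins, CameraParameters and almost_canonical_cameras are hypothetical names, and the eye samples are passed in as a plain list instead of being produced by a Sampler. Letting every camera aim at one shared focal point on the positive Z-axis, as far away as the look at point is from the eye, is also an assumption, chosen so that objects near the look at point stay sharp.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// Stand-ins for the ray tracer's own types, redefined so the sketch
// compiles on its own.
struct Point2D { double x, y; };
struct Point3D { double x, y, z; };
struct Vector3D { double x, y, z; };

// Hypothetical bundle of the parameters of one perspective camera.
struct CameraParameters
{
    Point3D eye;
    Point3D look_at;
    Vector3D up;
    double distance;
    double aspect_ratio;
};

double distance_between(const Point3D& a, const Point3D& b)
{
    const double dx = b.x - a.x;
    const double dy = b.y - a.y;
    const double dz = b.z - a.z;
    return std::sqrt(dx * dx + dy * dy + dz * dz);
}

// Builds one "almost-canonical" camera per eye sample. The real eye and
// look at point are only used to measure how far away the focal point is;
// moving the cameras to their real position is a separate step.
std::vector<CameraParameters> almost_canonical_cameras(
    const Point3D& eye,
    const Point3D& look_at,
    double distance,
    double aspect_ratio,
    const std::vector<Point2D>& eye_samples)
{
    assert(!eye_samples.empty());

    // Assumption: all cameras share one focal point on the positive Z-axis.
    const Point3D focal_point{ 0.0, 0.0, distance_between(eye, look_at) };

    std::vector<CameraParameters> cameras;
    for (const Point2D& sample : eye_samples)
    {
        cameras.push_back(CameraParameters{
            Point3D{ sample.x, sample.y, 0.0 },  // eye located around (0,0,0)
            focal_point,                         // looking along positive Z
            Vector3D{ 0.0, 1.0, 0.0 },           // standing straight up
            distance,
            aspect_ratio });
    }

    return cameras;
}
```

Only the eye differs from one camera to the next, which is why they are called almost canonical.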

Placing each of the cameras in the correct position is done in a separate step using a transformation.

Applying this transformation is already implemented in DisplaceableCamera.
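To give an idea of what such a transformation amounts to, the sketch below builds an orthonormal frame from the eye, the look at point and the up vector, and uses it to move a point from canonical camera space to its final position. It is an illustration only, not the code behind DisplaceableCamera: CameraFrame, make_camera_frame and to_world are hypothetical names, the types are simplified stand-ins, and the handedness convention (right = up × forward) is an assumption picked so that the canonical camera maps onto itself.

```cpp
#include <cassert>
#include <cmath>

// Stand-ins for the ray tracer's own types.
struct Point3D { double x, y, z; };
struct Vector3D { double x, y, z; };

Vector3D cross(const Vector3D& a, const Vector3D& b)
{
    return Vector3D{ a.y * b.z - a.z * b.y,
                     a.z * b.x - a.x * b.z,
                     a.x * b.y - a.y * b.x };
}

Vector3D normalized(const Vector3D& v)
{
    const double length = std::sqrt(v.x * v.x + v.y * v.y + v.z * v.z);
    assert(length > 0);
    return Vector3D{ v.x / length, v.y / length, v.z / length };
}

// Hypothetical camera frame: an origin plus three orthonormal axes.
struct CameraFrame
{
    Point3D origin;
    Vector3D right, up, forward;
};

CameraFrame make_camera_frame(const Point3D& eye, const Point3D& look_at, const Vector3D& up)
{
    // The canonical Z-axis must end up pointing from the eye to the look at point.
    const Vector3D forward = normalized(Vector3D{ look_at.x - eye.x, look_at.y - eye.y, look_at.z - eye.z });
    // Assumed convention: right = up x forward, so that the canonical
    // camera (forward = +Z, up = +Y) gets right = +X.
    const Vector3D right = normalized(cross(up, forward));
    // Recompute up so that the three axes are exactly perpendicular.
    const Vector3D true_up = cross(forward, right);

    return CameraFrame{ eye, right, true_up, forward };
}

// Moves a point from canonical camera space to its final position.
Point3D to_world(const CameraFrame& f, const Point3D& p)
{
    return Point3D{ f.origin.x + p.x * f.right.x + p.y * f.up.x + p.z * f.forward.x,
                    f.origin.y + p.x * f.right.y + p.y * f.up.y + p.z * f.forward.y,
                    f.origin.z + p.x * f.right.z + p.y * f.up.z + p.z * f.forward.z };
}
```

With the eye at (0,0,5) and the look at point at (0,0,-15), the canonical origin lands on the eye and the canonical point (0,0,20) lands on the look at point.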

Declare the factory function.

Make the finishing touches.

3. Evaluation

What happens if you use a deterministic sampler (e.g., stratified sampler) vs a nondeterministic one (e.g., random sampler)? By deterministic sampler we mean a sampler that always returns the same samples when given the same rectangle.

Recreate the scene shown below: